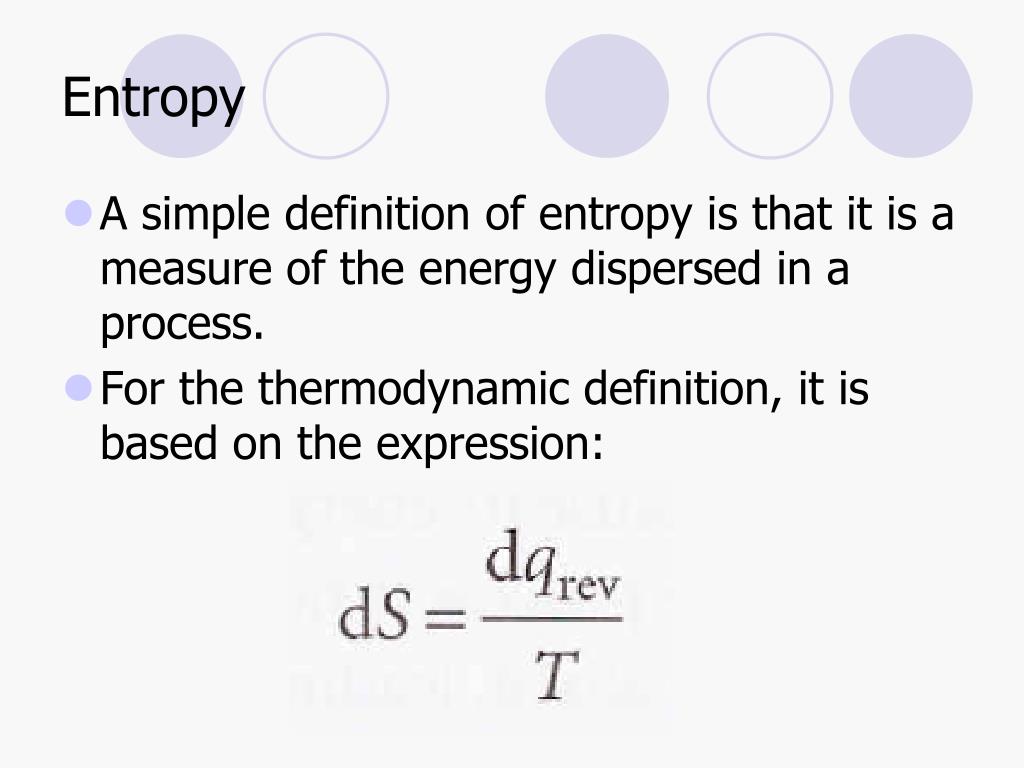

It's harder than you'd think to find a system that doesn't let energy in or out (our universe is about as good an example as we have), but entropy describes how disorder develops in a system as large as the universe or as small as a thermos full of coffee.

However, entropy doesn't have to do with the type of disorder you think of when you lock a bunch of chimpanzees in a kitchen. It has more to do with how many possible permutations of mess can be made in that kitchen than with how big a mess is possible. Of course, the entropy depends on a lot of factors: how many chimpanzees there are, how much stuff is stored in the kitchen and how big the kitchen is. So, if you were to look at two kitchens, one very large and stocked to the gills but meticulously clean, and another that's smaller with less stuff in it but already trashed by chimps, it's tempting to say the messier room has more entropy, but that's not necessarily the case. Entropy concerns itself more with how many different states are possible than with how disordered a system is at the moment; a system, therefore, has more entropy if there are more molecules and atoms in it, and if it's larger. And if there are more chimps.

But don't feel bad if you're confused: the definition can vary depending on which discipline is wielding it at the moment. In the mid-19th century, a German physicist named Rudolf Clausius, one of the founders of thermodynamics, was working on a problem concerning the efficiency of steam engines and invented the concept of entropy to help measure the useless energy that cannot be converted into useful work. A couple of decades later, Ludwig Boltzmann (entropy's other "founder") used the concept to explain the behavior of immense numbers of atoms: even though it is impossible to describe the behavior of every particle in a glass of water, a formula for entropy still makes it possible to predict their collective behavior when they are heated.

"In the 1960s, the American physicist E.T. Jaynes interpreted entropy as information that we miss to specify the motion of all particles in a system," says Popovic. "For example, one mole of gas consists of 6 × 10²³ particles. Thus, for us, it is impossible to describe the motion of each particle, so instead we do the next best thing, by defining the gas not through the motion of each particle, but through the properties of all the particles combined: temperature, pressure, total energy. The information that we lose when we do this is referred to as entropy."

And the terrifying concept of "the heat death of the universe" wouldn't be possible without entropy. Our universe most likely started out as a singularity (an infinitesimally small, ordered point of energy) that ballooned out and continues expanding all the time, so entropy is constantly growing: there's more space, and therefore more potential states of disorder for the atoms here to adopt. Scientists have hypothesized that, long after you and I are gone, the universe will eventually reach some point of maximum disorder, at which point everything will be the same temperature, with no pockets of order (like stars and chimpanzees) to be found. And if it happens, we'll have entropy to thank for it.

# Entropy: a simple definition #

The meaning of entropy is difficult to grasp, as it may seem like an abstract concept. However, we see examples of entropy in our everyday lives. For instance, if a car tire is punctured, the air inside disperses in all directions. When water in a dish is left on a counter, it eventually evaporates, its individual molecules spreading out into the surrounding air. When a hot object is placed in a room, it spreads heat energy in all directions.

Entropy can be thought of as a measure of the dispersal of energy: it measures how much energy has been dispersed in a process. Energy spontaneously flows from high concentration to low (heat, for example, flows from a hotter body to a colder one), and although all forms of energy can be used to do work, it is not possible to convert the entire available energy into work. Hence, the entropy of an isolated system always tends to increase.
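The hot-to-cold heat flow described in this article can be put into numbers with Clausius's relation ΔS = Q/T for heat Q exchanged at a constant temperature T. The sketch below is a toy illustration, not anything from the article's sources. It assumes two idealized bodies large enough that their temperatures don't change while heat flows. The function names `entropy_change_of_heat_flow` and `carnot_max_efficiency` are hypothetical. The second function adds the standard textbook Carnot bound, 1 − T_cold/T_hot, to show why not all heat can become work.

```python
"""Entropy bookkeeping for heat flowing between two idealized reservoirs.

Toy illustration only: each body is assumed large enough that its temperature
stays constant while heat flows, so the entropy change of each is Q / T.
"""


def entropy_change_of_heat_flow(q_joules: float, t_hot_k: float, t_cold_k: float) -> float:
    """Return the total entropy change (J/K) when q_joules flows from hot to cold."""
    if t_hot_k <= 0 or t_cold_k <= 0:
        raise ValueError("temperatures must be positive, in kelvin")
    ds_hot = -q_joules / t_hot_k   # the hot body loses heat, so its entropy drops
    ds_cold = q_joules / t_cold_k  # the cold body gains the same heat at a lower T
    return ds_hot + ds_cold


def carnot_max_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Upper bound on the fraction of heat an engine can turn into work."""
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("need 0 < t_cold_k < t_hot_k, in kelvin")
    return 1.0 - t_cold_k / t_hot_k


if __name__ == "__main__":
    # 1000 J leaking from a 350 K object into a 300 K room
    print(f"dS_total = {entropy_change_of_heat_flow(1000.0, 350.0, 300.0):.3f} J/K")
    # Even an ideal engine running between these temperatures wastes most of the heat
    print(f"max efficiency = {carnot_max_efficiency(350.0, 300.0):.1%}")
```

Because the cold body gains the heat at a lower temperature than the hot body loses it, the total comes out positive (about 0.476 J/K here). Swapping the temperatures, so that heat would flow "uphill", gives a negative total, which is the direction isolated systems don't spontaneously go.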

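Boltzmann's counting view of entropy, mentioned above, is usually written S = k_B ln W, where W is the number of microscopic arrangements (microstates) a system can be in. Here is a minimal sketch under a deliberately simple toy model, which is an assumption and not a real gas. The particles are distinguishable, and each can sit in any of a fixed number of cells, with no energy constraints. The cells play the role of the places in the article's kitchen. The helper names are hypothetical. The Shannon-style function illustrates Jaynes's "missing information" reading: when all W microstates are equally likely, −Σ p ln p reduces to ln W.

```python
"""Toy microstate counting behind Boltzmann's S = k_B ln W.

Assumed toy model (not a real gas): distinguishable particles, each free to sit
in any one of n_cells cells, with no energy constraints. W is the number of
distinct arrangements (microstates).
"""
import itertools
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def count_microstates(n_particles: int, n_cells: int) -> int:
    """Brute-force count of arrangements; equals n_cells ** n_particles."""
    return sum(1 for _ in itertools.product(range(n_cells), repeat=n_particles))


def boltzmann_entropy(n_microstates: int) -> float:
    """S = k_B ln W, in J/K."""
    return K_B * math.log(n_microstates)


def shannon_entropy_nats(probs: list[float]) -> float:
    """Missing information H = -sum(p ln p), in nats; zero-probability states add nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)


if __name__ == "__main__":
    # More particles, then a bigger "kitchen": W and S both grow
    for n, m in [(2, 4), (4, 4), (4, 8)]:
        w = count_microstates(n, m)
        print(f"{n} particles, {m} cells: W = {w}, S/k_B = {math.log(w):.2f}")
```

In this model W goes from 16 to 256 to 4096 across the three cases. That matches the article's point that a system with more particles, or more room, has more possible states and therefore more entropy, regardless of how "messy" any single arrangement looks. The brute-force count is only for clarity; for anything beyond tiny sizes you would use n_cells ** n_particles directly.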